Data quality management tools are specialized software designed to automatically find, fix, and monitor issues hiding in your business data. Think of them as expert inspectors and engineers for your company's information, making sure it’s accurate, complete, and trustworthy enough to build sound decisions on.

Why Your Business Foundation Depends on Data Quality

Imagine trying to build a skyscraper on a foundation of crumbling concrete and mismatched bricks. It doesn't matter how brilliant the architecture is—the entire structure is destined to fail. Your business data is that foundation. Every single marketing campaign, sales forecast, customer service interaction, and strategic decision is built right on top of it.

When that data is flawed—riddled with duplicates, missing information, or outdated entries—the consequences ripple outward. Marketing emails bounce, sales teams chase dead-end leads, and leadership ends up making critical choices based on a distorted view of reality. Poor data quality doesn't just create minor inconveniences; it actively undermines your growth and erodes customer trust.

The Shift from Manual Fixes to Automated Solutions

In the past, organizations relied on manual spot-checks and cumbersome spreadsheet formulas to clean their data. This approach was slow, prone to human error, and unsustainable at modern data volumes. Many businesses now generate more information in a single day than they once did in an entire year, which puts thorough manual oversight out of reach.

This is where data quality management tools come in. They solve this scalability problem by automating the core tasks of data maintenance.

These platforms act as a vigilant, always-on system that profiles, cleanses, standardizes, and monitors your data. By handling the heavy lifting, they transform data from a potential liability into a reliable, strategic asset.

This growing reliance on automated solutions is reflected in the market's rapid expansion. One market estimate values the global data quality tools market at around USD 1.66 billion and projects it to reach about USD 2.44 billion by 2032, citing a compound annual growth rate of 18.20%. This growth isn't surprising; it's fueled by the need to manage immense data volumes while keeping everything accurate and compliant.
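Growth projections like this are linked by the compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The minimal Python sketch below recomputes it. The text does not state the base year, so the eight-year span (for example, 2024 to 2032) is an assumption for illustration.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, annual_rate: float, years: float) -> float:
    """Value after compounding `annual_rate` once per year for `years` years."""
    return start_value * (1 + annual_rate) ** years


# Assumption: an 8-year span (e.g. 2024 -> 2032); the base year is not stated.
YEARS = 8
implied_rate = cagr(1.66, 2.44, YEARS)          # growth implied by the two dollar figures
projected_value = project(1.66, 0.182, YEARS)   # value implied by the quoted 18.20% rate

print(f"Implied CAGR, USD 1.66B -> 2.44B: {implied_rate:.1%}")
print(f"USD 1.66B at 18.20% for {YEARS} years: USD {projected_value:.2f}B")
```

Under that assumption, the two dollar figures imply roughly 4.9% growth a year. Compounding USD 1.66 billion at 18.20% for eight years would instead land near USD 6.3 billion. When quoting projections, it's worth confirming that the start value, end value, and rate come from the same report and base year.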

Empowering Teams with Trustworthy Data

Ultimately, implementing a robust data quality management tool empowers every team in your organization.

- Sales teams can trust their CRM data to connect with the right prospects without wasting time.

- Marketing departments can personalize campaigns with confidence, knowing their customer information is accurate.

- Analysts and decision-makers can build forecasts and strategies based on a solid, factual foundation.

By establishing a single source of truth, these tools pave the way for more effective operations and smarter growth. Maintaining this clean foundation requires consistent effort, which is why understanding database management best practices is a critical first step.

The Six Pillars of High-Quality Data

Before you can get the most out of a data quality tool, you need a solid grasp of what "good" data actually looks like. It’s not just about whether information is correct; quality is measured across six distinct dimensions. Once you understand these pillars, you can diagnose specific problems in your database and see exactly how software helps fix them.

Think of these six dimensions as the vital signs for your data. A doctor doesn't just check your temperature; they look at blood pressure, heart rate, and more to get a full picture of your health. A smart data quality strategy does the same, evaluating your information from multiple angles to make sure it's fit for decision-making.

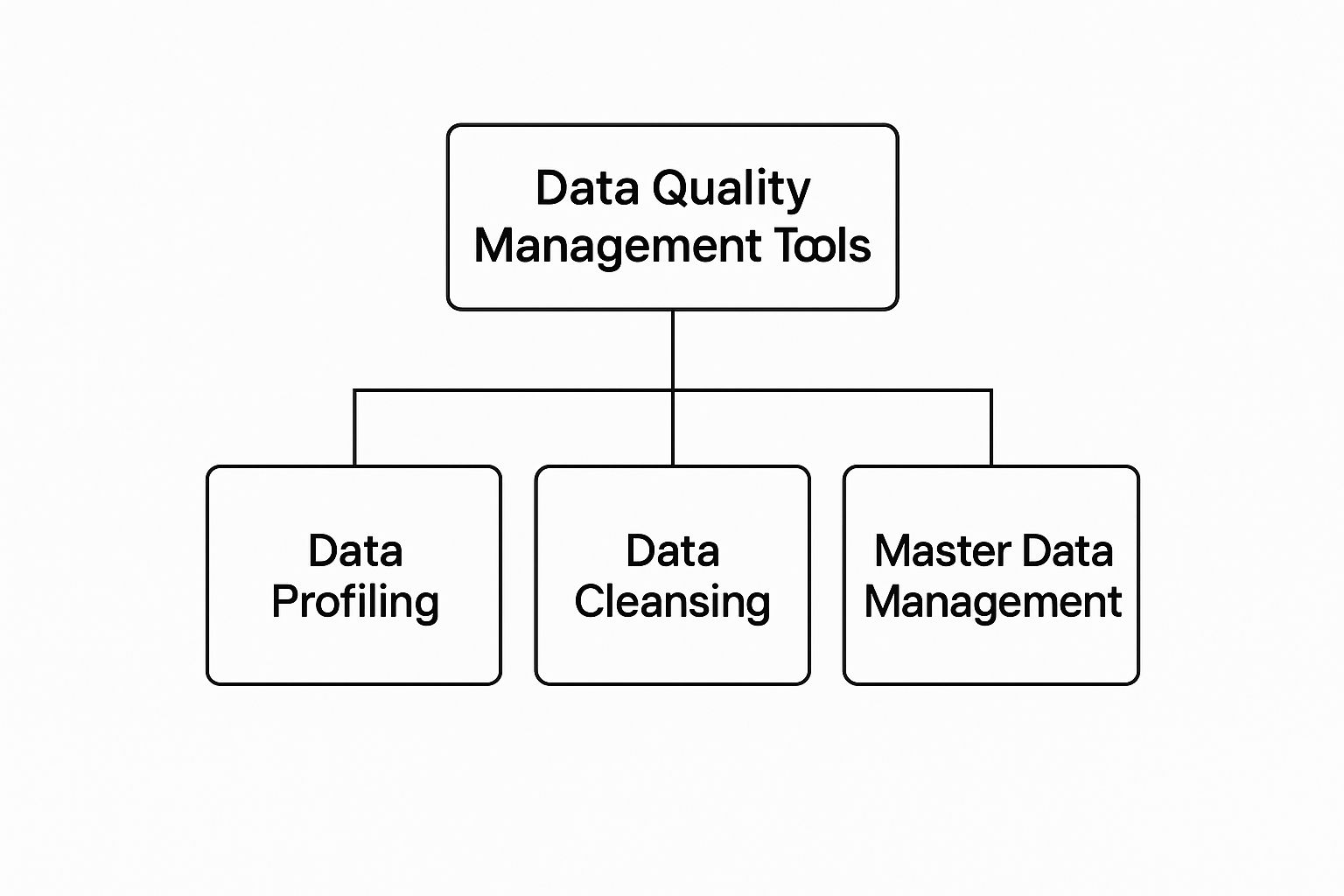

This visual breaks down how different tools tackle core functions like profiling, cleansing, and master data management.

As you can see, these tools serve different but connected purposes—from the initial analysis (profiling) and active correction (cleansing) all the way to strategic organization (master data management).

Core Dimensions of Data Quality Explained

To really pin this down, let's break down each of the six pillars. They all answer a simple, practical question about your data. Getting any of them wrong can have a surprisingly big impact on your business operations, from marketing campaigns to sales follow-ups.

| Data Quality Pillar | Simple Question | Business Impact of Poor Quality |
|---|---|---|
| Accuracy | Is the information correct? | A misspelled company name leads to a bounced email and a lost lead. |
| Completeness | Is all the necessary data here? | Missing shipping addresses mean you can't fulfill customer orders. |
| Consistency | Does this data match across systems? | Your CRM says "NY," but billing says "CA," causing sales confusion. |
| Timeliness | Is the information up-to-date? | Marketing to last year's customer interests results in a failed campaign. |
| Uniqueness | Is this the only record for this entity? | Duplicate customer records waste marketing spend and skew your analytics. |
| Validity | Does the data follow the right format? | A phone number field with "N/A" text breaks your automated dialing software. |

Each pillar addresses a unique vulnerability in your data. When they're all strong, you have a foundation you can trust to build reliable strategies and drive growth. Let's look a little closer at each one.

Pillar 1: Accuracy

This is the big one. Accuracy asks a straightforward question: is the information true? If a customer's name is spelled wrong or their phone number has a typo, the data is inaccurate. It’s that simple.

For instance, a sales rep relying on a CRM where a prospect's company is listed as "Acme Corp" instead of the correct "Acme Inc." is working with inaccurate data. This tiny mistake can lead to failed emails and a clumsy first impression.

Pillar 2: Completeness

Next up is Completeness. This pillar checks if all the critical data is actually there. Incomplete data is like a puzzle with missing pieces—you can’t see the full picture, and you definitely can't act on it.

Imagine an e-commerce database where 20% of customer profiles are missing their shipping addresses. The marketing team can't send them promotional mail, and the fulfillment team can't ship their orders. That’s a classic completeness problem that hits revenue directly.
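Measuring completeness comes down to counting how many records have a usable value in a required field. Below is a minimal Python sketch. It assumes records arrive as dictionaries and treats blank strings as missing. The field name and sample rows are hypothetical.

```python
from typing import Iterable, Mapping


def is_filled(value: object) -> bool:
    """Treat None and blank/whitespace-only strings as missing."""
    return value is not None and str(value).strip() != ""


def completeness(records: Iterable[Mapping[str, object]], field: str) -> float:
    """Fraction of records with a usable value in `field` (1.0 for no records)."""
    rows = list(records)
    if not rows:
        return 1.0
    return sum(is_filled(row.get(field)) for row in rows) / len(rows)


# Hypothetical sample data: two of five profiles have a shipping address.
customers = [
    {"id": 1, "shipping_address": "12 Main St, Springfield"},
    {"id": 2, "shipping_address": ""},
    {"id": 3, "shipping_address": None},
    {"id": 4},  # field absent entirely
    {"id": 5, "shipping_address": "9 Elm Ave, Riverton"},
]

score = completeness(customers, "shipping_address")
print(f"shipping_address completeness: {score:.0%}")  # prints 40%
```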

Pillar 3: Consistency

Consistency makes sure your data doesn't contradict itself across different systems. If your CRM lists a customer in "New York" but your billing software has them in "California," you have an inconsistency problem.

This kind of conflict creates chaos. Inconsistent data forces your team to waste time hunting for the correct information, which delays decisions and opens the door for major errors.

These issues pop up all the time when data is stuck in separate, disconnected silos. A core job of data quality tools is to sync information across all your platforms to create a single source of truth everyone can rely on.
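At its simplest, a cross-system consistency check lines up the same entity in two systems and reports the fields that disagree. The sketch below assumes both systems share a customer ID; in practice, linking records across systems is often the harder part. The CRM and billing extracts are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Record = Dict[str, Optional[str]]


def normalize(value: Optional[str]) -> str:
    """Compare case-insensitively and ignore surrounding whitespace."""
    return (value or "").strip().upper()


def find_conflicts(
    crm: Dict[str, Record], billing: Dict[str, Record], field: str
) -> List[Tuple[str, Optional[str], Optional[str]]]:
    """List (customer_id, crm_value, billing_value) where the systems disagree."""
    conflicts = []
    for customer_id, crm_record in crm.items():
        billing_record = billing.get(customer_id)
        if billing_record is None:
            continue  # missing from billing: a completeness gap, not a conflict
        crm_value, billing_value = crm_record.get(field), billing_record.get(field)
        if normalize(crm_value) != normalize(billing_value):
            conflicts.append((customer_id, crm_value, billing_value))
    return conflicts


# Hypothetical extracts keyed by a shared customer ID.
crm = {"C001": {"state": "NY"}, "C002": {"state": "CA"}, "C003": {"state": "tx"}}
billing = {"C001": {"state": "CA"}, "C002": {"state": "CA"}, "C003": {"state": "TX"}}

conflicts = find_conflicts(crm, billing, "state")
print(conflicts)  # [('C001', 'NY', 'CA')]
```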

Pillar 4: Timeliness

Data has a shelf life. Timeliness is all about how up-to-date your information is, because data that was perfectly accurate six months ago might be useless—or even damaging—today.

Think about a marketing team launching a campaign based on customer behavior data from last year. People's needs and interests change fast, so the campaign is likely to fall flat. Timeliness ensures you're making decisions based on today's reality, not an old snapshot.

Pillar 5: Uniqueness

The fifth pillar, Uniqueness, is all about hunting down and eliminating duplicate records. When the same customer or product shows up multiple times in your database, it creates a mess.

- Wasted Money: Why send marketing materials to the same person five times? It’s inefficient and eats into your budget.

- Skewed Analytics: Duplicates inflate your customer count, leading to wildly inaccurate sales forecasts and reports.

- Bad Customer Service: A support agent can't see a customer's full history if it's scattered across three different profiles.
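Counting duplicates starts with deciding what identifies an entity. This sketch assumes a normalized email address is the identifying key, which is a hypothetical choice. It catches only exact matches after trimming and lowercasing; near-matches such as name variants need fuzzier logic.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def match_key(record: Dict, key_fields: Sequence[str]) -> Tuple[str, ...]:
    """Normalize the fields that identify an entity (trim + lowercase)."""
    return tuple(str(record.get(f) or "").strip().lower() for f in key_fields)


def duplicate_groups(records: List[Dict], key_fields: Sequence[str] = ("email",)):
    """Map each shared key to the IDs of every record that carries it."""
    groups: Dict[Tuple[str, ...], List[int]] = defaultdict(list)
    for record in records:
        groups[match_key(record, key_fields)].append(record["id"])
    return {key: ids for key, ids in groups.items() if len(ids) > 1}


def uniqueness(records: List[Dict], key_fields: Sequence[str] = ("email",)) -> float:
    """Distinct entities divided by total rows (1.0 means no duplicates)."""
    if not records:
        return 1.0
    return len({match_key(r, key_fields) for r in records}) / len(records)


customers = [
    {"id": 1, "email": "ana@example.com"},
    {"id": 2, "email": " Ana@Example.com"},
    {"id": 3, "email": "ben@example.com"},
    {"id": 4, "email": "ana@example.com"},
    {"id": 5, "email": "cara@example.com"},
]

print(duplicate_groups(customers))  # {('ana@example.com',): [1, 2, 4]}
print(uniqueness(customers))        # 0.6: five rows, three real customers
```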

Pillar 6: Validity

Finally, Validity confirms that your data is in the right format. This isn't about whether it's correct, but whether it follows the rules. For example, an email address has to follow the name@domain.com structure to be valid.

If a field meant for phone numbers contains text like "Not Available," that data is invalid. It doesn't fit the required format, which means it’s useless for automated dialers or messaging platforms. Valid data ensures your systems can actually process and use the information without hitting a technical wall.
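Validity checks test shape, not truth. The patterns below are deliberately simple assumptions, not a complete standard:

- an email needs an `@` and a dotted domain;
- a phone number needs 10 to 15 digits, with an optional leading `+`.

A well-formed address can still be undeliverable.

```python
import re

# Deliberately simple format rules: something@something.tld for email, and
# 10-15 digits (optionally prefixed with "+") once common separators are removed.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")
PHONE_PATTERN = re.compile(r"\+?\d{10,15}")


def is_valid_email(value: str) -> bool:
    return EMAIL_PATTERN.fullmatch(value.strip()) is not None


def is_valid_phone(value: str) -> bool:
    compact = re.sub(r"[\s().-]", "", value)  # drop spaces, parentheses, dots, hyphens
    return PHONE_PATTERN.fullmatch(compact) is not None


for raw in ["name@domain.com", "name@domain", "(555) 123-4567", "Not Available"]:
    print(f"{raw!r}: email={is_valid_email(raw)}, phone={is_valid_phone(raw)}")
```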

Key Features of Modern Data Quality Tools

Think of data quality management tools like a mechanic's toolbox. You wouldn't use just one wrench to fix an entire engine; you need a whole set of specialized instruments. Each has a specific job, and together, they keep everything running smoothly. These platforms aren't just one-trick ponies—they're a full suite of capabilities built to diagnose, repair, and maintain your data's health.

To really get a feel for how these tools turn messy, unreliable data into a trustworthy asset, let's break down their five most critical features. Each one handles a different stage of the data quality lifecycle, from the first look to ongoing protection.

Data Profiling: The Initial Health Check

Before you can fix a problem, you have to know what you're dealing with. Data profiling is that first step, acting like a diagnostic scan for your entire database. It automatically combs through your data to give you the lay of the land—uncovering its structure, content, and any hidden issues.

This feature gets to the bottom of critical questions like:

- What percentage of our customer records are missing a phone number?

- Are there weird spikes or outliers in last quarter's sales figures?

- Do our date formats stay consistent from one table to the next?

By generating a detailed report card on your data's condition, profiling gives you a clear roadmap for your cleanup efforts. It pinpoints exactly where the biggest fires are so you can direct your resources effectively.
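A first-pass profile can answer the three questions above with a few summary statistics. This sketch assumes in-memory Python lists and does three things:

- it reports the missing share and distinct count for a column;
- it reduces values to digit patterns to expose mixed date formats;
- it flags outliers using Tukey's IQR fences, one common rule but not the only one.

Column names and values are hypothetical.

```python
import re
import statistics
from collections import Counter
from typing import Dict, List, Optional


def profile_column(values: List[Optional[object]]) -> Dict[str, object]:
    """Basic profile: missing share, distinct count, and value 'shapes'."""
    present = [v for v in values if v is not None and str(v).strip() != ""]
    return {
        "missing_pct": 100 * (len(values) - len(present)) / len(values),
        "distinct": len(set(map(str, present))),
        # Replace every digit with 9 so "2024-03-01" and "03/15/2024" show up
        # as two different format patterns.
        "patterns": Counter(re.sub(r"\d", "9", str(v)) for v in present),
    }


def iqr_outliers(numbers: List[float], k: float = 1.5) -> List[float]:
    """Values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(numbers, n=4)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [x for x in numbers if x < low or x > high]


# Hypothetical columns from a customer table and a sales table.
phones = ["555-0101", None, "555-0199", "", "555-0101"]
signup_dates = ["2024-03-01", "03/15/2024", "2024-04-02"]
quarterly_sales = [1200, 1350, 1100, 1250, 1300, 9800, 1150, 1280]

print(profile_column(phones))         # 40% missing, 2 distinct values
print(profile_column(signup_dates))   # two date patterns -> inconsistent format
print(iqr_outliers(quarterly_sales))  # [9800]
```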

Data Cleansing: Fixing The Errors

Once profiling has flagged the problems, data cleansing (often called data scrubbing) rolls up its sleeves and gets to work. This is the hands-on repair process where the tool corrects inaccuracies, standardizes formats, and fills in the gaps. It's where the real magic happens.

For instance, a cleansing function can automatically fix common typos in city names, standardize variants like "N.Y." and "New York" into a consistent "NY," or strip special characters out of phone number fields. This process makes sure your data isn't just accurate but also uniform and ready to be used by other systems.
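Standardization is usually driven by reference mappings plus simple character rules. Below is a minimal sketch using a hypothetical alias table; real tools maintain far larger reference data. Unknown values are returned unchanged so they can be reviewed rather than guessed.

```python
import re

# Hypothetical alias table; production tools ship much larger reference data.
STATE_ALIASES = {
    "NY": "NY", "N.Y.": "NY", "NEW YORK": "NY",
    "CA": "CA", "CALIF.": "CA", "CALIFORNIA": "CA",
}


def standardize_state(raw: str) -> str:
    """Map known variants to a two-letter code; leave unknown values untouched."""
    key = " ".join(raw.upper().split())  # uppercase and collapse whitespace
    return STATE_ALIASES.get(key, raw.strip())


def clean_phone(raw: str) -> str:
    """Strip everything except digits, keeping a leading '+' if present."""
    digits = re.sub(r"\D", "", raw)
    return "+" + digits if raw.strip().startswith("+") else digits


print([standardize_state(s) for s in ["N.Y.", " new  york ", "NY", "Texas"]])
# ['NY', 'NY', 'NY', 'Texas']
print(clean_phone("(555) 123-4567"), clean_phone("+1 555.123.4567"))
# 5551234567 +15551234567
```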

Data Matching And Deduplication: Merging The Duplicates

Duplicate records are one of the most common—and costly—data quality headaches. They waste marketing spend, throw off your analytics, and create a confusing experience for your customers. Data matching and deduplication features are designed to hunt down and eliminate these redundant entries.

The tool uses smart algorithms to spot records that probably refer to the same person or company, even if the details aren't an exact match (like "Jon Smith" vs. "Jonathan P. Smith"). It then merges them into a single, comprehensive "golden record" that represents the most accurate version of the truth.

This is fundamental for building a reliable single customer view, which is absolutely essential for personalized marketing and effective sales outreach.
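Fuzzy matching and golden-record creation can be sketched with a simple rule-based matcher. This is an illustrative stand-in, not how any particular product works. Two records count as a probable match when:

- their last names agree;
- one first name is a prefix of the other (Jon/Jonathan);
- their emails agree.

The survivorship rule, where the newest non-empty value wins, is also an assumption. Commercial tools typically use richer, often probabilistic, scoring.

```python
from typing import Dict, List, Tuple


def split_name(full_name: str) -> Tuple[str, str]:
    """(first, last) in lowercase; middle names and initials are ignored."""
    tokens = [t.strip(".,").lower() for t in full_name.split()]
    if not tokens:
        return "", ""
    return tokens[0], tokens[-1]


def names_match(a: str, b: str) -> bool:
    """Same last name, and one first name is a prefix of the other (Jon/Jonathan)."""
    first_a, last_a = split_name(a)
    first_b, last_b = split_name(b)
    if not first_a or not first_b or last_a != last_b:
        return False
    return first_a.startswith(first_b) or first_b.startswith(first_a)


def is_probable_duplicate(r1: Dict, r2: Dict) -> bool:
    """Require a name match plus a non-empty, identical email to limit false merges."""
    email_1 = (r1.get("email") or "").strip().lower()
    email_2 = (r2.get("email") or "").strip().lower()
    return bool(email_1) and email_1 == email_2 and names_match(r1["name"], r2["name"])


def golden_record(records: List[Dict]) -> Dict:
    """Survivorship: the newest non-empty value wins for each field."""
    merged: Dict = {}
    for record in sorted(records, key=lambda r: r["updated"], reverse=True):
        for field, value in record.items():
            if field not in merged and value not in (None, ""):
                merged[field] = value
    return merged


a = {"id": 1, "name": "Jon Smith", "email": "jsmith@example.com",
     "phone": "", "city": "Boston", "updated": "2024-01-10"}
b = {"id": 2, "name": "Jonathan P. Smith", "email": "jsmith@example.com",
     "phone": "5551234567", "city": "", "updated": "2024-03-02"}

if is_probable_duplicate(a, b):
    print(golden_record([a, b]))
```

In practice, pairs that match only partially would usually go to a data steward for review rather than being merged automatically.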

Data Monitoring: The 24/7 Watchdog

Data quality isn't a one-and-done project; it’s an ongoing commitment. Data monitoring acts like a perpetual watchdog, constantly scanning your data for new issues as they pop up. You set the rules, and it keeps an eye on things for you.

If an incoming entry has an invalid email format or a sales figure falls far outside the normal range, the tool can flag or quarantine it in real time. Catching issues at the point of entry keeps bad data out of your core systems and prevents small mistakes from snowballing into massive problems. It’s the difference between fire prevention and firefighting.
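Rule-based monitoring can be sketched as a set of named checks applied to each incoming record, with failures quarantined instead of loaded. The two rules and the 50,000 upper bound on `amount` are hypothetical. A production setup would also send an alert where the comment indicates.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


@dataclass(frozen=True)
class Rule:
    name: str
    passes: Callable[[Dict], bool]  # True when the record satisfies the rule


def valid_email(record: Dict) -> bool:
    return EMAIL_PATTERN.fullmatch(record.get("email") or "") is not None


def amount_in_range(record: Dict, low: float = 0, high: float = 50_000) -> bool:
    amount = record.get("amount")
    return isinstance(amount, (int, float)) and low <= amount <= high


RULES = [Rule("valid_email", valid_email), Rule("amount_in_range", amount_in_range)]


def ingest(stream: List[Dict], rules: List[Rule] = RULES) -> Tuple[List, List]:
    """Accept clean records; quarantine failures with the names of broken rules."""
    accepted, quarantined = [], []
    for record in stream:
        failures = [rule.name for rule in rules if not rule.passes(record)]
        if failures:
            quarantined.append((record["id"], failures))  # a real system would alert here
        else:
            accepted.append(record["id"])
    return accepted, quarantined


incoming = [
    {"id": 101, "email": "ana@example.com", "amount": 1200},
    {"id": 102, "email": "ana(at)example.com", "amount": 900},
    {"id": 103, "email": "ben@example.com", "amount": 950_000},
]
accepted, quarantined = ingest(incoming)
print(accepted)     # [101]
print(quarantined)  # [(102, ['valid_email']), (103, ['amount_in_range'])]
```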

Data Enrichment: Filling In The Blanks

Sometimes, your data isn't wrong—it's just incomplete. Data enrichment takes your existing records and makes them better by adding valuable information from trusted third-party sources. This could mean appending missing demographic data, industry codes, or even social media profiles to your customer records.

A perfect example is improving contact data. An enrichment process might use an email validation API to confirm that email addresses are not only formatted correctly but are actually deliverable, giving your campaign performance a serious boost.
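Enrichment is essentially a lookup-and-fill step. The sketch below does not call a real email validation API or data vendor. `FIRMOGRAPHICS` is a hypothetical in-memory stand-in for a third-party source keyed by email domain. It fills only blank fields, so existing values are never overwritten.

```python
from typing import Dict

# Hypothetical stand-in for a licensed third-party data source, keyed by email domain.
FIRMOGRAPHICS: Dict[str, Dict[str, object]] = {
    "acme.example": {"company": "Acme Inc.", "industry": "Manufacturing", "employees": 250},
    "globex.example": {"company": "Globex", "industry": "Logistics", "employees": 1200},
}


def enrich(record: Dict[str, object], source=FIRMOGRAPHICS) -> Dict[str, object]:
    """Return a copy with blank fields filled from `source`; never overwrite data."""
    enriched = dict(record)
    email = str(record.get("email") or "")
    domain = email.rpartition("@")[2].lower()
    for field, value in source.get(domain, {}).items():
        if enriched.get(field) in (None, ""):
            enriched[field] = value
    return enriched


contact = {"email": "pat@acme.example", "company": "ACME", "industry": ""}
print(enrich(contact))
# {'email': 'pat@acme.example', 'company': 'ACME', 'industry': 'Manufacturing', 'employees': 250}
```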

One of the biggest leaps forward has been the integration of artificial intelligence (AI) and machine learning (ML) into these tools. These technologies automate complex tasks like profiling and cleansing at a massive scale. As recent market analysis points out, intelligent algorithms can scan huge datasets for inconsistencies proactively, helping businesses maintain high data reliability with much less manual effort. You can find more details on this trend and its market impact in this industry report from IMARC Group.

How to Choose the Right Data Quality Tool

Picking the right data quality management tool can feel overwhelming. With so many options out there, each promising to solve all your problems, the secret is to tune out the sales pitches and get crystal clear on what your organization actually needs.

A tool that’s perfect for a small e-commerce startup will almost certainly be the wrong fit for a multinational bank. The goal isn’t to find the best tool—it's to find the best tool for you. That means aligning it with your specific data, your tech stack, and your business goals.

Before you even think about scheduling a demo, you need to do a thorough self-assessment. This is about creating a sharp, honest picture of your data ecosystem—its scale, its complexity, and how information flows through it. Think of it as a diagnosis. A clear diagnosis will be your compass, pointing you toward a tool that solves your real-world problems.

Evaluate Your Core Business Needs

First things first: what does success actually look like? A good data quality tool should be a direct line to hitting your business objectives.

So, ask yourself what you’re really trying to achieve. Are you hoping to slash the number of bounced marketing emails? Do you need to nail your sales forecast accuracy? Or is this all about staying on the right side of regulatory compliance?

Once you have those goals in mind, map them back to your data.

- Data Volume and Velocity: How much data are we talking about? A small business with a few thousand customer records has entirely different needs than an enterprise processing millions of transactions a day. Be realistic about your scale.

- Data Sources and Complexity: Is your data sitting neatly in one CRM? Or are you pulling it from dozens of places like databases, APIs, and random flat files? The more complex your setup, the more you need a tool with serious integration muscle.

- Team Skillset: Who’s going to be using this thing? If it’s a team of hardcore data engineers, you can go with something technical. But if business analysts or marketing managers need to run their own quality checks, you’ll need a user interface that doesn’t require a computer science degree.

Cloud-Based Versus On-Premise Solutions

One of the biggest forks in the road is deciding where the tool will live. Do you want a cloud-based Software-as-a-Service (SaaS) solution, or an on-premise tool installed on your own servers? Each has its pros and cons.

On-premise tools give you maximum control and security, which is often non-negotiable for industries with strict data privacy rules, like finance or healthcare. The trade-off? They come with hefty upfront costs for hardware and require a dedicated IT team to keep them running.

On the other hand, cloud-based solutions offer flexibility, scalability, and a much lower initial investment. You pay a subscription, and the vendor handles all the messy backend stuff—infrastructure, security, and updates. This frees up your team to focus on actually using the tool, not maintaining it.

The market has already voted with its feet. Industry analysis shows that cloud-based models now make up about 64% of total market revenue and are growing at a rate of over 20% annually. It’s a clear sign that businesses prefer the agility and cost-effectiveness of the cloud. You can dig into more of this data in a detailed report on Mordor Intelligence.

Create Your Feature Checklist

Okay, now it’s time to get practical. Based on everything you’ve figured out so far, create a simple checklist of "must-have" versus "nice-to-have" features. This little list will be your best friend when you start looking at different products, keeping you focused on what truly matters.

Essential Features to Consider:

- Data Profiling: Can the tool automatically scan your data and find hidden problems before you even start the cleanup process?

- Cleansing and Standardization: How well does it fix errors and standardize formats? Think addresses, phone numbers, and custom business rules.

- Deduplication Capabilities: How smart is its matching logic? You need something that can spot duplicates that aren't exact matches, like "John Smith" vs. "J. Smith."

- Real-Time Monitoring: Can it watch your data as it comes in? Catching bad data at the source is always better than cleaning it up later.

- Connectivity and Integration: Does it play nicely with your existing systems? Look for pre-built connectors for your CRM, ERP, and marketing automation platforms.
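The must-have versus nice-to-have split can be turned into a simple scoring matrix. Any missing must-have disqualifies a vendor, and nice-to-haves add weighted points. The feature labels, weights, and vendors below are hypothetical. Which items count as must-haves depends on your own assessment.

```python
from typing import Dict, List, Optional, Set, Tuple

# Example split only: which features are must-haves depends on your own assessment.
MUST_HAVE: Set[str] = {"profiling", "cleansing", "deduplication", "crm_connector"}
NICE_TO_HAVE: Dict[str, int] = {"real_time_monitoring": 3, "enrichment": 2, "no_code_ui": 2}


def evaluate(features: Set[str]) -> Tuple[Optional[int], List[str]]:
    """Gate on must-haves, then score nice-to-haves by weight."""
    missing = sorted(MUST_HAVE - features)
    if missing:
        return None, missing  # disqualified: a gap in the must-have list
    return sum(w for f, w in NICE_TO_HAVE.items() if f in features), []


vendors = {
    "Tool A": {"profiling", "cleansing", "deduplication", "crm_connector", "enrichment"},
    "Tool B": {"profiling", "cleansing", "real_time_monitoring", "no_code_ui"},
    "Tool C": {"profiling", "cleansing", "deduplication", "crm_connector",
               "real_time_monitoring", "no_code_ui"},
}

results = {name: evaluate(features) for name, features in vendors.items()}
for name, (score, missing) in sorted(results.items()):
    print(name, f"score={score}" if score is not None else f"missing={missing}")
```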

By working through these steps—figuring out your needs, picking a deployment model, and making a feature checklist—you can cut through the noise and confidently choose a data quality tool that will deliver real, measurable results for your business.

Implementing Your Data Quality Strategy

A powerful tool is only as good as the strategy behind it. Just installing sophisticated data quality management tools without a clear plan is like handing a professional camera to someone who's never taken a picture. You might get a lucky shot, but you won't get consistent, high-quality results. Real success comes from building a robust program from the ground up.

This isn't just a technical fix; it's a strategic shift. It’s about getting everyone on the same page, from the C-suite to the teams using the data every single day. A successful implementation creates a lasting culture of data excellence, not just a one-time cleanup project.

Secure Leadership Buy-In

Before you do anything else, you need support from the top. Getting leadership on board isn’t just about securing a budget—it's about making data quality an organizational priority. You have to frame the initiative in terms of business outcomes, not technical jargon.

Show them the numbers. Explain how poor data leads to wasted marketing spend, inefficient sales cycles, and flawed business intelligence. Gartner has estimated that poor data quality costs organizations an average of $12.9 million a year. When you present a clear ROI, the investment becomes a logical business decision, not just another IT expense.

Define What Good Data Means

"Good data" isn't a universal concept. Its definition changes depending on your business goals. For a sales team, it might mean having an accurate phone number and company size for every lead. For marketing, a valid, deliverable email address is everything.

Work with different departments to create clear, measurable data quality metrics. These standards become the rules your new tool will enforce.

- Sales: A lead record is "complete" only if it includes a name, company, title, and verified phone number.

- Marketing: An email contact is "valid" only if it passes verification and hasn't hard-bounced in the last 90 days.

- Operations: A customer address is "accurate" only if it matches official postal service records.
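Rules like these translate naturally into small, testable functions. Below is a minimal sketch. The field names (`phone_verified`, `passed_verification`, `last_hard_bounce`) are hypothetical, and so is the tiny stand-in for official postal records. Here, a bounce exactly 90 days ago still counts as recent.

```python
from datetime import date
from typing import Dict, Set, Tuple

REQUIRED_LEAD_FIELDS = ("name", "company", "title", "phone")

# Hypothetical extract of official postal records: (street, city, ZIP).
POSTAL_REFERENCE: Set[Tuple[str, str, str]] = {("12 MAIN ST", "SPRINGFIELD", "12345")}


def lead_is_complete(lead: Dict) -> bool:
    """Sales: name, company, title, and a verified phone number."""
    has_fields = all(str(lead.get(f) or "").strip() for f in REQUIRED_LEAD_FIELDS)
    return has_fields and lead.get("phone_verified") is True


def email_is_valid(contact: Dict, today: date) -> bool:
    """Marketing: passed verification and no hard bounce in the last 90 days."""
    if not contact.get("passed_verification"):
        return False
    last_bounce = contact.get("last_hard_bounce")
    return last_bounce is None or (today - last_bounce).days > 90


def address_is_accurate(address: Dict) -> bool:
    """Operations: the normalized address appears in the postal reference set."""
    key = (address["street"].strip().upper(), address["city"].strip().upper(),
           address["zip"].strip())
    return key in POSTAL_REFERENCE


today = date(2024, 6, 1)
lead = {"name": "Dana Lee", "company": "Acme Inc.", "title": "VP Sales",
        "phone": "5551234567", "phone_verified": True}
print(lead_is_complete(lead))  # True
print(email_is_valid({"passed_verification": True,
                      "last_hard_bounce": date(2024, 5, 20)}, today))  # False: 12 days ago
print(address_is_accurate({"street": "12 Main St", "city": "Springfield", "zip": "12345"}))  # True
```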

Defining these rules upfront gives your data quality initiative a clear direction. It transforms a vague goal like "improve our data" into a concrete set of measurable actions that directly support business operations.

Assign Clear Ownership Roles

Data quality is a team sport, but every team needs a captain. Assigning clear roles prevents the "not my problem" mentality from creeping in. A data steward is a person or team responsible for overseeing the quality of a specific data set, like customer or product data.

These stewards don't fix every error themselves. Instead, they champion the data quality rules, monitor the dashboards in your tool, and coordinate with other teams to resolve tricky issues. This creates a system of accountability that is absolutely essential for long-term success.

Start with a Pilot Project

Trying to boil the ocean is a recipe for failure. Instead of a massive, company-wide rollout, start small with a focused pilot project. Choose a single, high-impact area where bad data is causing obvious pain. A common starting point is cleaning the contact list for an upcoming marketing campaign. For more guidance on this initial phase, you can learn about the essentials of the email list cleaning process.

A successful pilot project does two critical things. First, it lets you learn the new tool in a low-risk environment. Second, and more importantly, it generates a quick, tangible win you can show to the rest of the organization. That early victory builds momentum for a much broader implementation.

Data Quality Tools in the Real World

It’s one thing to talk about data quality in the abstract, but seeing these tools in action is where their value really clicks. The ROI isn’t just a number on a spreadsheet—it’s fewer returned packages, safer patient care, and rock-solid regulatory compliance. The right tool translates directly into tangible business wins.

Let's look at how a few different industries are using data quality tools to solve specific, high-stakes problems. These examples show how the features we've discussed deliver real cost savings, better efficiency, and stronger customer trust.

E-commerce: Slashing Costly Shipping Errors

An online retailer processing thousands of orders a day is in a constant fight against bad addresses. A single typo in a street name or zip code means a failed delivery, an unhappy customer, and paying for reshipment. This is a classic data accuracy problem that quietly drains revenue.

By bringing in a data quality tool, the company can automate address verification right at the checkout.

- Real-time Cleansing: As customers type, the tool automatically corrects misspellings and standardizes addresses to match official postal formats.

- Data Enrichment: It can even fill in missing details like apartment or suite numbers, closing the loop on potential delivery failures.

The result? A massive drop in shipping errors, leading to significant cost savings and a far better customer experience. Clean address data is the foundation of a reliable fulfillment machine.

Healthcare: Ensuring Accurate Patient Records

In a hospital, a duplicate patient record isn't just an annoyance; it's a genuine safety risk. If a patient has files under both "John Smith" and "Jonathan Smith," their medical history, allergies, and prescriptions get split apart.

Healthcare providers use data quality tools to solve this with powerful deduplication features.

The software’s matching algorithms intelligently find and flag potential duplicates for a data steward to review. Once confirmed, these scattered files are merged into a single, comprehensive "golden record." This ensures doctors have the full picture every single time.

This simple fix dramatically improves the quality of care and helps prevent dangerous medical mistakes.

Financial Services: Nailing Regulatory Demands

Banks and financial firms operate under intense regulatory scrutiny. They have to provide squeaky-clean reports to government bodies, and a single mistake can lead to eye-watering fines. This makes data monitoring non-negotiable.

These institutions use data quality tools as a 24/7 watchdog over their transaction data. They set up automated rules that constantly scan for anomalies, inconsistencies, or missing fields needed for reports. If a record fails a check, an alert goes straight to the compliance team for immediate action. On the marketing side, teams here also use verification to check if an email exists before it ever enters their CRM, ensuring their outreach data is top-notch from day one.

Data Quality Tool Applications Across Industries

Data quality isn't a one-size-fits-all solution. Different industries prioritize different features to solve their most pressing challenges. From manufacturing to retail, the goal is always the same: turn messy data into a reliable asset.

The table below offers a few snapshots of how these tools are applied across various sectors to drive real business results.

| Industry | Primary Use Case | Key Business Benefit |
|---|---|---|
| Retail | Standardizing customer addresses and contact info. | Reduced shipping errors and improved marketing personalization. |
| Manufacturing | Cleansing and enriching supplier and parts data. | Optimized supply chain and reduced procurement costs. |
| Telecommunications | Validating customer data for billing and service delivery. | Fewer billing disputes and higher customer satisfaction. |
| Insurance | Deduplicating policyholder records to prevent fraud. | Lower financial risk and more accurate underwriting. |
| Government | Ensuring accurate citizen data for public services. | More efficient service delivery and better resource allocation. |

As you can see, the applications are incredibly diverse, but the outcome is consistent: better data leads to better decisions, smoother operations, and a stronger bottom line.

Frequently Asked Questions

When you start digging into data quality management tools, a lot of questions come up. We've put together answers to some of the most common ones to give you a clearer picture of how these tools work and where they fit into your strategy.

How Often Should We Check Our Data Quality?

The best way to think about data quality is as a continuous, ongoing process, not a one-time project. It's tempting to schedule a big cleanup every quarter, but the reality is that data goes stale fast. Customers move, companies change names, and new information is flooding your systems every single day.

That’s why modern data quality tools are built for real-time (or close to it) monitoring.

Think of it like your home security system. You don’t just check the locks once a month; the system is always on, watching for issues as they happen. In the same way, the most effective strategy is to have your tool constantly monitoring data as it enters your systems, stopping bad data before it ever takes root.

This proactive approach keeps small errors from snowballing into massive, expensive problems down the line.

Can These Tools Fix Every Data Problem Automatically?

While automation is a massive strength of data quality tools, they aren't a "magic bullet" that fixes every problem without any human input. They are incredibly good at handling repetitive, rule-based tasks with speed and accuracy that a human could never match.

For instance, a tool can automatically:

- Correct misspelled state names.

- Standardize all your jumbled date formats.

- Flag duplicate records that are almost certainly the same person or company.

But the more complex or nuanced issues still need a human brain. A tool might flag "Jon Smith" and "Jonathan P. Smith" as potential duplicates, but a data steward will likely need to make the final call on whether to merge them. The goal is to automate around 80% of the grunt work, freeing up your team to focus on the critical 20% that requires actual thinking.

What Is the Difference Between Data Quality and Data Governance?

It’s really easy to mix these two up, but they play very different—though connected—roles. The easiest way to think about it is like building a house.

- Data quality is the hands-on work of the builders. They’re the ones laying the foundation, making sure the walls are straight, and checking that the wiring is done right. It's the practical, in-the-weeds process of cleaning, standardizing, and monitoring the data itself.

- Data governance is the architect’s blueprint. It’s the high-level strategy, the set of rules, and the policies that define how data should be managed across the entire company. It answers questions like, "Who is responsible for this data?" and "What are the official rules for using it?"

Simply put, data governance sets the rules of the game, and data quality management tools are what you use to actually play by them.